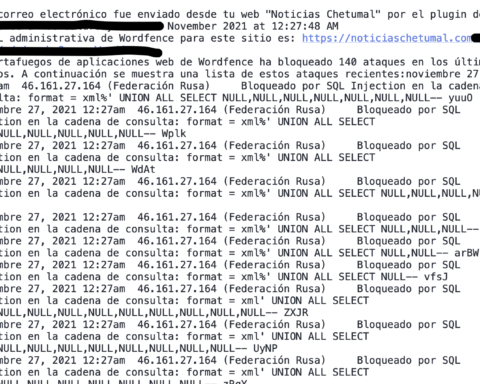

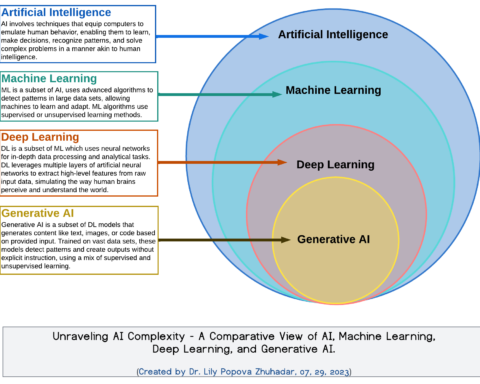

There’s a phrase from the early years of computer science in the late 1950s that’s as perennial as Moore’s Law: “garbage in, garbage out,” or GIGO. If the input is rubbish, the output is also bunk. GIGO has plagued programmers for decades, but two new areas of research known as “Machine UnLearning” and “Model Disgorgement” are attempting to solve the problem that researchers believe is behind AI hallucinations, that is, LLMs generating factually incorrect information.

A privacy complaint against OpenAI was filed with the Austrian Data Protection Authority this week over its failure to correct false information about the complainant on ChatGPT. After the complainant requested that the incorrect data be corrected or deleted, OpenAI refused, giving the black box defense by arguing that it was unable to correct or delete incorrect information inside its mysterious LLM. Though this may be in violation of the stringent GDPR regulations that govern data and privacy in the European Union (such as Article 17: The Right to be Forgotten), OpenAI may not be playing coy when its mea culpa comes with the caveat that it won’t delete incorrect information because it can’t. The behaviours of LLMs and neural networks in general emerge from the machine learning training process, as the models digest big data, rather than from logic hardcoded by programmers. “As a result, in many cases the precise reasons why LLMs behave the way they do,” Nature wrote, “as well as the mechanisms that underpin their behaviour, are not known, even to their own creators.”

Machine UnLearning became a vibrant area of research after the GDPR codified the Right to be Forgotten, but it is also useful for redacting and removing information such as copyrighted material, PII, and toxic content. Ken Liu has a fantastic primer on Machine UnLearning on the Stanford blog to help you get your head around some of the concepts, such as “unlearning” via differential privacy.

Disgorgement is a more recent beast developed by Amazon and explained in the academic paper “AI Model Disgorgement: Methods and Choices”: “One potential fix for training corpus data defects is model disgorgement, the elimination of not just the improperly used data, but also the effects of improperly used data on any component of an ML model. Model disgorgement techniques (“MD” hereafter) can be used to address a wide range of issues, such as reducing bias or toxicity, increasing fidelity, and ensuring responsible usage of intellectual property. Developers of large models should understand their options for implementing model disgorgement, and novel methods to perform model disgorgement should be developed to help ensure the responsible usage of data.”

Michael Kearns, professor of computer science at the University of Pennsylvania, notes that model disgorgement could, in theory, support GDPR, if OpenAI can find and delete bits of data that are causing ChatGPT’s errors. “The techniques we survey in our paper have tried to anticipate what might be meant by the GDPR when they say the right to be forgotten, because the GDPR makes it pretty clear that in certain circumstances a user should be able to raise their hand and say I want my data to be deleted from storage,” he said. “But the last time I checked, it’s silent on the question of what if a model was trained using your data?”
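To make the gap Kearns describes concrete, here is a minimal toy sketch in Python. It is not taken from the paper or from any real system: the "model" is just an average, and the names `train`, `dataset`, and the record owners are hypothetical. It only illustrates why deleting a record from storage leaves an already-trained model unchanged, and why the naive fix is to retrain without that record.

```python
from statistics import mean


def train(records):
    """Toy 'model': the average of the training values.

    A hypothetical stand-in for real model weights, for illustration only.
    """
    return mean(value for _owner, value in records)


# Hypothetical training set of (owner, value) pairs.
# Assume the record contributed by "alice" is wrong and she asks for deletion.
dataset = [("alice", 100.0), ("bob", 10.0), ("carol", 20.0)]
model = train(dataset)

# Step 1: delete the record from storage only.
dataset_after_deletion = [r for r in dataset if r[0] != "alice"]
model_after_deletion = model  # the trained model still carries alice's influence

# Step 2: remove the record's effect as well, here by retraining from scratch
# on the remaining data (the simplest, and most expensive, baseline).
retrained_model = train(dataset_after_deletion)

print("model before deletion:      ", model)
print("model after storage delete: ", model_after_deletion)
print("model after retraining:     ", retrained_model)
```

Retraining from scratch is trivial for an average but prohibitively expensive for an LLM, which is part of why cheaper unlearning and disgorgement techniques are being researched.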