Gonzalez v. Google and Twitter v. Taamneh have more in common than the snappy alliteration of their case names. Both cases concern content moderation, and their outcomes may affect the way major platforms such as Facebook, Wikipedia, and YouTube deal with disagreeable content. The disagreeable content is some of the worst of the worst online: terrorist propaganda in the wake of the Paris attacks in France. As Oyez summarizes Twitter v. Taamneh, this case arises from the same set of facts as Gonzalez v. Google:

Twitter, Inc. v. Taamneh. (n.d.). Oyez. Retrieved February 24, 2023, from https://www.oyez.org/cases/2022/21-1496

Nohemi Gonzalez, a U.S. citizen, was killed by a terrorist attack in Paris, France, in 2015—one of several terrorist attacks that same day. The day afterwards, the foreign terrorist organization ISIS claimed responsibility by issuing a written statement and releasing a YouTube video.

Gonzalez’s father filed an action against Google, Twitter, and Facebook, claiming, among other things, all three platforms were liable for aiding and abetting international terrorism by failing to take meaningful or aggressive action to prevent terrorists from using its services, even though they did not play an active role in the specific act of international terrorism that actually injured Gonzalez. The district court dismissed the claims based on aiding-and-abetting liability under the Anti-Terrorism Act, and the U.S. Court of Appeals for the Ninth Circuit reversed.

The piece of legislation that governs this disagreeable content is Section 230 of the U.S. federal government's Communications Decency Act, enacted in 1996, which gives broad protections to tech platforms. Republicans decry the law because they believe Section 230 gives social media giants the ability to censor conservative viewpoints. Democrats, on the other hand, argue that Section 230 shields these tech giants from accountability. The left-leaning ACLU (American Civil Liberties Union), however, writes: “Section 230 promotes free speech by removing strong incentives for platforms to limit what we can say and do online.” Battle lines are drawn, but the Supreme Court’s conservative majority means it could rule against the tech giants.

The questions to be considered by the U.S. Supreme Court:

1. Does an internet platform “knowingly” provide substantial assistance under 18 U.S.C. § 2333 merely because it allegedly could have taken more “meaningful” or “aggressive” action to prevent such use?

2. May an internet platform whose services were not used in connection with the specific “act of international terrorism” that injured the plaintiff still be liable for aiding and abetting under Section 2333?

I personally wonder how the rulings will affect the Christchurch Call, initiated by New Zealand’s former prime minister Jacinda Ardern with the help of French president Emmanuel Macron in the wake of the 2019 terrorist attacks on two mosques in Christchurch, which killed 51 worshipers and were streamed over platforms like YouTube. Afterwards, the French Ministry of Foreign Affairs wrote: “French President Emmanuel Macron and New Zealand Prime Minister Jacinda Ardern have mobilized a group of heads of state and government, international organizations and leaders of businesses and digital organizations to take action against terrorist and extremist content online and end the exploitation of the Internet by terrorist actors.”

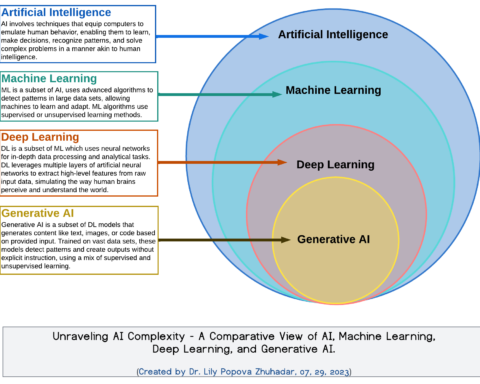

The Christchurch Call has also been picked up by tech giants such as Microsoft, which has expanded its commitment to countering violent extremism online: “These new commitments are part of our wider work to advance the responsible use of AI and are focused on empowering researchers, increasing transparency and explainability around recommender systems, and promoting responsible AI safeguards. We’re supporting these commitments with a new $500,000 pledge to the Christchurch Call Initiative on Algorithmic Outcomes to fund research on privacy-enhancing technologies and the societal impact of AI-powered recommendation engines.”