A good editor wears multiple hats. There’s the hard hat for the factory floor, where the editor is in charge of quality control. They need to know their audience and pick story pitches that fit the publication. The story is like unprocessed ore to be refined or rejected, tweaked on the assembly line, or ripped apart for spare parts and reworked if it can indeed be salvaged at a later date. Then an editor needs to put on their Hemingway “Oysterman hat”, which facilitates the removal of superfluous lines and extraneous material that doesn’t contribute to the story. Finally, there’s the Sherlock Holmes “Deerstalker hat”, accompanied by the mandatory tobacco pipe, that an editor wears in the final phase of editing, when facts are checked and the story gets the final tick of approval before the publish button is pressed.

ChatGPT fails abysmally at wearing the deerstalker hat. According to Press Gazette, the feedback from an editor at an agency that “hired” ChatGPT raised enough alarm bells that the agency subsequently “fired” it and scrapped its experiment with AI for publishing. ChatGPT’s first assignment drew on a Spanish-language article about the mugging of an elderly woman at a cash machine in Buenos Aires, using this prompt: “Create news story using this source material, add names, place, when it happened: https://tn.com.ar/policiales/2023/02/27/video-un-ladron-le-pego-una-trompada-a-una-jubilada-cuando-estaba-sacando-plata-de-un-cajero-automatico/.”

The editor’s feedback on the resulting story listed the following errors:

- You got the name of the victim wrong

- The age of the victim wrong

- The location of the crime wrong

- The description of the video didn’t match the footage

- Said the perpetrator was unidentified when we have a name and an age

- Said the perpetrator was at large when he was arrested

- Appear to have fabricated a quotation by the mayor of Buenos Aires

- Got the name of the mayor of Buenos Aires wrong

- Appear to have fabricated a quotation by the victim’s children.

Having worked for years in Latin America, I suspect this may be because ChatGPT no habla español muy bien (doesn’t speak Spanish very well). This has not, however, been an isolated incident. Newsrooms that are cutting costs and firing competent editors had best beware of hiring a robot that is not ready to fill the void.

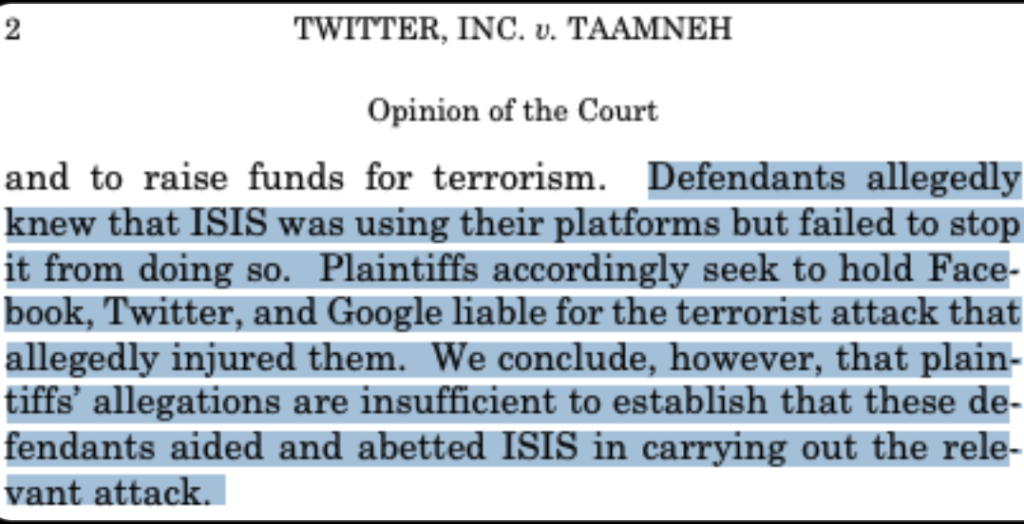

The next big issue on the horizon is whether publishers, already facing catastrophic losses to their coffers after sustained assaults from Silicon Valley, can charge ChatGPT for using their content. Whether they “have a right in law to charge ChatGPT for analysing and exploiting their content is a moot point. News Corp certainly thinks they do but legal opinion is far from set on this. Unless new legislation specifically addresses the issue, it may take a test case to decide.”